We recently announced our partnership with Snapchat. They were really savvy about how they integrated the voice experience. The way it works is that when you’re in the lenses section, you pop open the camera and all the various filters and lenses are in there. That’s when you say, “Hey, Snapchat…” Because the wake word is only available in that context, the experience stays focused and people aren’t inclined to ask general-purpose questions.
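To make the idea of a context-scoped wake word concrete, here is a minimal sketch in Python. It is not Snapchat’s or SoundHound’s implementation; the `WakeWordGate` class, the screen names, and the text normalization are all assumptions made for illustration. The only point it shows is that the wake phrase is ignored unless the user is on a screen where voice is meant to work.

```python
import re
from dataclasses import dataclass


def _normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]", " ", text.lower())
    return " ".join(cleaned.split())


@dataclass(frozen=True)
class WakeWordGate:
    """Hypothetical gate: the wake phrase only counts on certain screens."""
    wake_phrase: str
    active_screens: frozenset

    def should_wake(self, current_screen: str, transcript: str) -> bool:
        # Outside the allowed context, the wake phrase is ignored entirely.
        if current_screen not in self.active_screens:
            return False
        return _normalize(transcript).startswith(_normalize(self.wake_phrase))


# "lenses" and "chat" are made-up screen names for this example.
gate = WakeWordGate("Hey, Snapchat", frozenset({"lenses"}))
```

With this gate, the same spoken phrase wakes the assistant on the hypothetical "lenses" screen and does nothing on any other screen, which is what keeps requests focused on lenses rather than general questions.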

The success of Pandora’s Voice Mode

Bret: So Snapchat’s really interesting. Let’s contrast that with Pandora.

Mike: Everything that Pandora does is voice-enabled. Pandora’s origins are radio, so the difference is you tell Pandora a song that you like and it uses the proprietary Music Genome Project technology to build a radio station based on that song and your preferences.

It tends to lend itself to things like, “I want to listen to this” or “I want to listen to that”. Then they extended it to moods. “Play me something relaxing” is a way to express a desire for music that doesn’t lend itself as much to a visual interface, because you’re not going to take every single mood and put it in a menu. You can just express yourself and the music plays accordingly. It’s a very powerful extension of music being an emotional service component.

But then it’s got the core stuff. You can say, “Thumbs up the song” and that particular station will play more songs like the one you just gave a thumbs up.

Embedded, edge, and cloud connectivity

Bret: Do you expect to have a growing business around embedded only?

Mike: We do have an embedded version of our technology called Houndify Edge.

Embedded allows you to get a voice response for a product, even if it doesn’t have an internet connection. We also have an optional cloud connection for a variety of things.

We’re starting to see a lot of interest in embedded voice control with an optional cloud connection. You get the benefit of immediacy for a narrow command-and-control use case where the data stays on the device, so privacy is built in.

We do have interest and momentum in that space, and we expect to see most devices become hybrid, with some core functionality on the device and the rest in the cloud.

As speech platforms have become more optimized and lighter weight, you don’t have to rely exclusively on the cloud to strike the right balance.
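As a rough illustration of the hybrid approach described above, the following Python sketch routes a recognized utterance to an on-device command table first and only falls back to the cloud when a connection is available. It is not the Houndify Edge API; `LOCAL_COMMANDS`, `handle_utterance`, and the `cloud` callback are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical on-device command set; a real product would define its own.
LOCAL_COMMANDS = {
    "lights on": "LIGHTS_ON",
    "lights off": "LIGHTS_OFF",
    "volume up": "VOLUME_UP",
}


@dataclass
class Result:
    source: str            # "device", "cloud", or "none"
    action: Optional[str]  # what the product should do, if anything


def handle_utterance(
    text: str, cloud: Optional[Callable[[str], str]] = None
) -> Result:
    """Try the narrow on-device commands first, then the optional cloud."""
    normalized = " ".join(text.lower().split())

    # Command-and-control requests are resolved locally: no network round
    # trip, and the utterance never leaves the device.
    if normalized in LOCAL_COMMANDS:
        return Result("device", LOCAL_COMMANDS[normalized])

    # Anything broader goes to the cloud, but only if one is connected.
    if cloud is not None:
        return Result("cloud", cloud(normalized))

    # Offline and not a local command: nothing to do.
    return Result("none", None)
```

For example, `handle_utterance("Lights ON")` resolves on the device with no network, while a broader request is answered only when a `cloud` function is supplied. Checking the local table first is what gives the immediacy and on-device privacy mentioned in the interview.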

Bret: When you think about appliances, people have these in the home for a long time, and they tend to have very simple interfaces by design. Where do you see that headed?

Mike: There’s the master-servant approach, which is you have a smart speaker in every room and every device is subservient to that speaker—a hub model. A lot of smart homes are headed that way. So you can program Alexa to enable this or Google to enable that, and users can control their lights through the speaker. It was easier for products that are already internet-enabled to simply take advantage of an API on a local network. But there are some devices that are going to want to have their own interfaces—mainly because they can control a lot more, customize a lot more, and maintain their brand.

Embedded just allows companies to lower costs so that not everything has to be an internet-connected device and it gives them more control over the experience. So we see a future where every device will have a voice interface for people who want to talk directly to it.

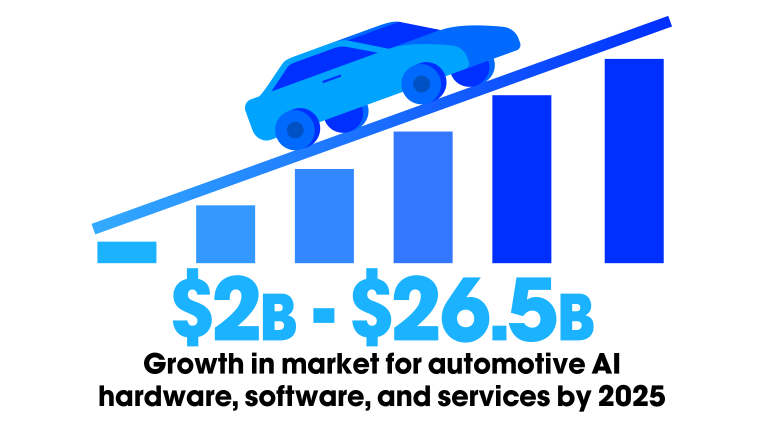

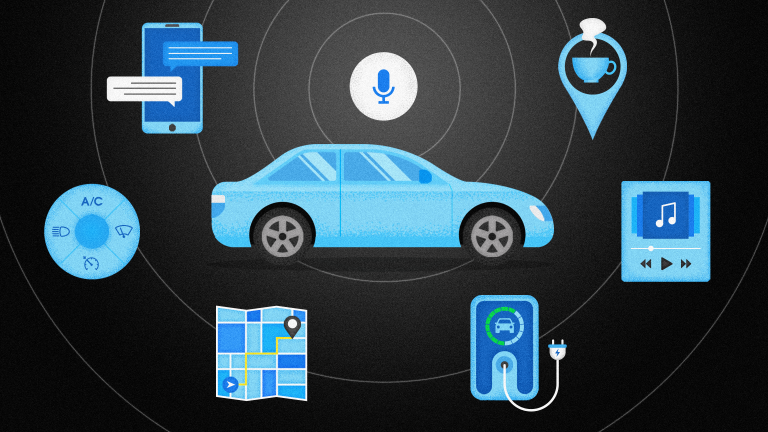

Bret: Yeah, that makes sense. So one of the areas that historically has been completely embedded, but is now more and more cloud and hybrid, is automotive. How do you look at automotive differently from some of these other surfaces we’ve just talked about, and where do you think that’s headed?

Mike: In the next five years you’re going to see a real distinction between legacy companies and the ones that recognize the shift. The latter are building new IoT products that happen to be cars. Traditional automotive companies need to catch up, get themselves connected, and have software updates. Car companies are really transforming into technology companies and recognize that voice platforms are part and parcel of it.

Best practices for developing a VUI

Bret: Share with our listeners some of the best practices or some of the recommendations you have for how to succeed.

Mike: The first thing is to have a strategy and to carve out the time with the right people in the organization to say, what is our point of view on voice interfaces? Not having a strategy guarantees being left behind.

Then the question is, through what platform do you want to deliver? If you want to become purely a content provider, then Amazon’s leading the pack to develop the skills, Google is certainly present, and we have partnerships on our platform through Houndify.

If you have a mobile app, is that the right platform for you to reach people? Or if you have a product, how are you going to move forward on it?

Start to look at how you connect with customers and deliver value across channels to determine what your first step needs to be.

Bret: And what about choosing the tech stack? What are the key things that I need to be thinking about today if I’m going to deploy my own assistant?

Mike: It’s a build-it-yourself or a build-by-partner strategy. Building it yourself is a question of a lot of investment in NLU and engineering support. Do I want to build my own domain using natural language understanding? And then there’s the partner strategy: what can’t I do, or what don’t I want to do, that’s going to benefit from a partnership like one with SoundHound?

It could be for parts of the experience—like ASR or wake word—or it can be the whole experience. And obviously the benefit of the whole experience is faster time-to-market and a complete end-to-end tech stack that will vault you into a competitive position much more quickly than if you were building it yourself.

You know, our CEO Keyvan likes to say, “It often takes companies three years to realize it’s going to take 10 years to build their own voice platform.”

Challenges of building an independent voice assistant

Bret: Obviously, you’ve had some people come to you who tried to build their own assistant. What is it that they’re most likely to struggle with?

Mike: I think it’s really a question of expanding the use cases and providing the robustness that only a very deep, natural language model can actually support. But I think the motivation is really about controlling the end-user experience and having access to the data.