Once you have a first model, you’ll want to improve it. Start by determining exactly what you’re trying to improve. Then add data, remove data, or change the features you’re using to match those goals. For example, a high false reject rate in your first model could indicate that your speaker set lacks variability and that you need to add more data.

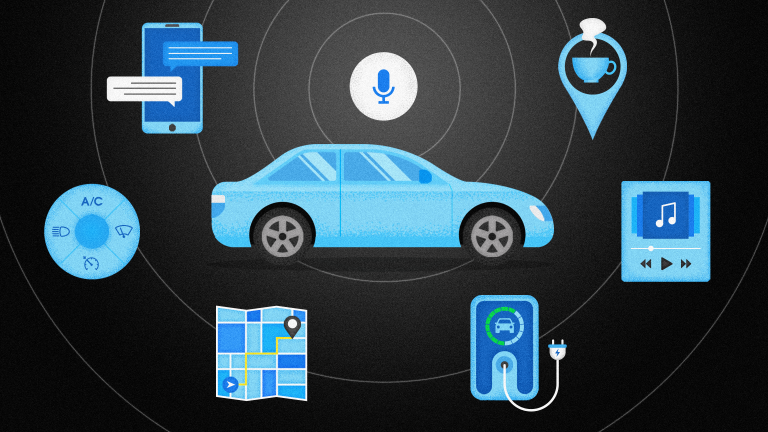
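To see where a high false reject rate is coming from, it can help to break it down by speaker group. The snippet below is a minimal sketch of that idea, not a description of any particular toolchain: the function name, the group labels, and the sample results are all hypothetical, and it assumes you already have a labeled evaluation set recording whether each clip contained the wake word and whether the model detected it.

```python
from collections import defaultdict


def false_reject_rate_by_group(results):
    """Return the false reject rate for each speaker group.

    `results` is an iterable of (group, contains_wake_word, detected) tuples.
    A false reject is a clip that contains the wake word but was not detected.
    Clips without the wake word are ignored here.
    """
    positives = defaultdict(int)  # clips that contain the wake word
    rejects = defaultdict(int)    # of those, clips the model missed
    for group, contains_wake_word, detected in results:
        if contains_wake_word:
            positives[group] += 1
            if not detected:
                rejects[group] += 1
    return {group: rejects[group] / positives[group] for group in positives}


# Hypothetical evaluation results, for illustration only.
sample_results = [
    ("accent_a", True, True),
    ("accent_a", True, True),
    ("accent_a", True, False),
    ("accent_a", True, True),
    ("accent_b", True, False),
    ("accent_b", True, False),
    ("accent_b", True, True),
    ("accent_b", True, True),
    ("accent_b", False, False),  # no wake word spoken, so not counted
]

rates = false_reject_rate_by_group(sample_results)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.0%} false rejects")
```

In this made-up sample, the second group is rejected twice as often as the first, which would suggest adding more recordings from speakers like those in that group before retraining.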

One of the greatest benefits of a branded wake word is the customization of the experience for users. Homing in on the exact set of speakers who make up your user group, tailoring the experience to the hardware, service, or app where it will be used, and analyzing and responding to the environment are what make a custom wake word valuable to the user and to the brand.

For many internal teams, collecting data from different environments, voice types, and accents and then training the models can be significant hurdles to developing and deploying a custom, branded wake word.

At SoundHound, Inc., we’ve had 15 years to perfect our training data and processes. Our teams are experienced at compiling the right data to create custom wake words that result in exceptional customer experiences and positive user feedback.

Explore Houndify’s independent voice AI platform or register for a free account. Want to learn more? Talk to us about how we can help you bring your voice strategy to life.

Kayla Regulski is a technical program manager who loves helping her coworkers succeed as much as she loves a craft beverage after a long run.